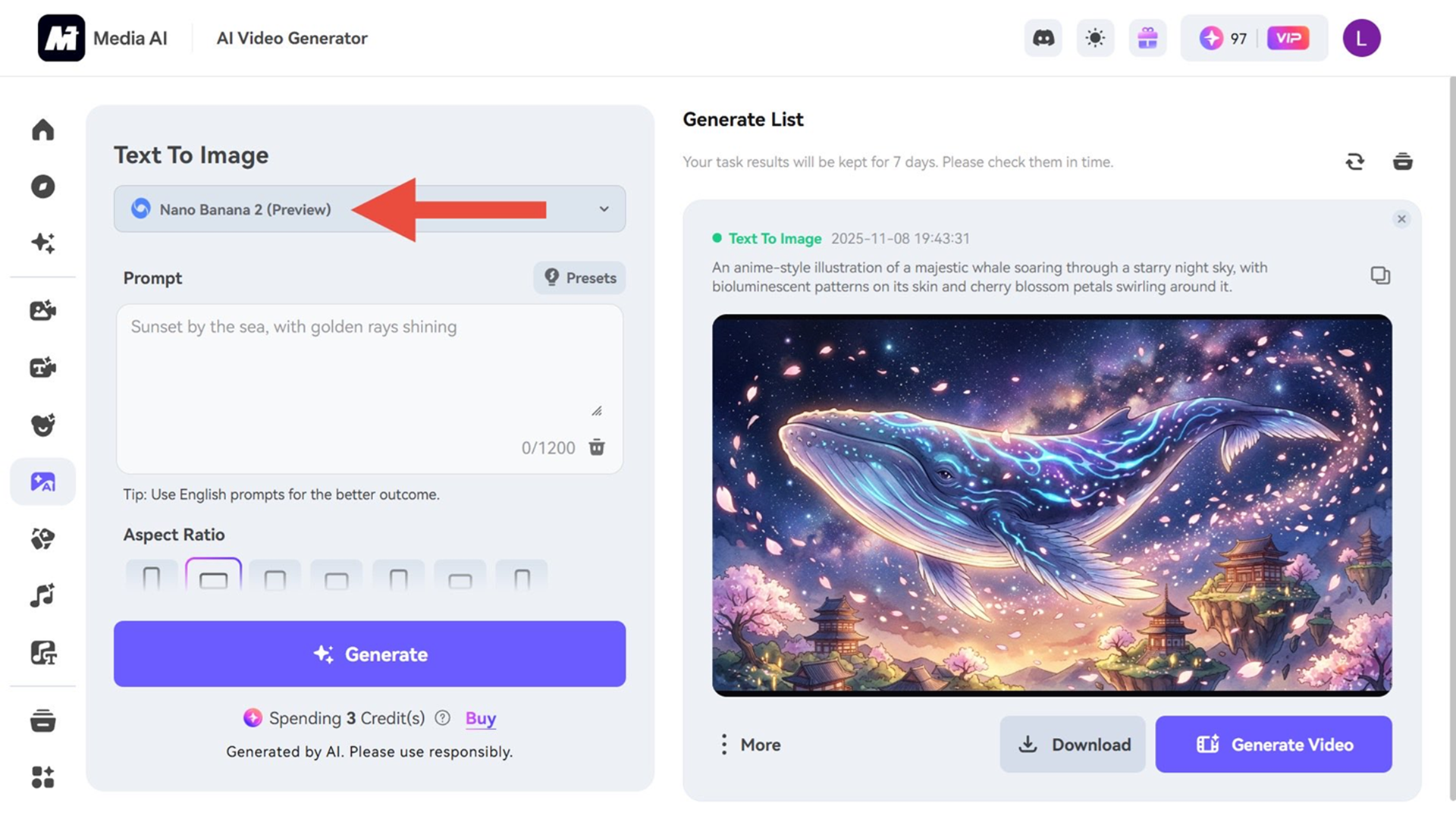

Google is preparing to unveil Nano Banana 2, the next version of its AI image generator, inside the Gemini app. Leaked previews shared on X hint at a major leap, not just in resolution but in how the AI “thinks” through and refines its work.

According to early previews, Nano Banana 2 introduces a multi-step, human-style workflow. The model reportedly plans the image first, then reviews its draft and corrects mistakes over several passes before producing the final version. This self-correction marks a major shift from traditional AI image models, which generate a result in a single shot.

By simulating the way humans visualize, refine, and finalize creative work, Google’s new system aims to deliver images that feel more intentional and natural.
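Google has not published how this workflow is implemented, so the following is only a toy sketch of the general plan, review, and correct loop described in the previews. Every name in it (`plan_image`, `first_draft`, `critique`, `refine`, `generate`) is hypothetical, and a set of strings stands in for an actual image.

```python
# Toy illustration of a plan -> draft -> critique -> refine loop.
# All functions are hypothetical stand-ins, not Google's method or API.

def plan_image(prompt: str) -> list[str]:
    """Split the prompt into the elements the image should contain."""
    return [part.strip() for part in prompt.split(",") if part.strip()]


def first_draft(plan: list[str]) -> set[str]:
    """Simulate an imperfect one-shot result: only every other element lands."""
    return set(plan[::2])


def critique(plan: list[str], draft: set[str]) -> list[str]:
    """List the planned elements that the current draft is missing."""
    return [element for element in plan if element not in draft]


def refine(draft: set[str], issues: list[str]) -> set[str]:
    """Fix one issue per pass, mimicking incremental correction."""
    return draft | {issues[0]}


def generate(prompt: str, max_passes: int = 5) -> tuple[set[str], int]:
    """Run the loop until the critique finds nothing or the pass budget runs out."""
    plan = plan_image(prompt)
    draft = first_draft(plan)
    passes = 0
    while passes < max_passes:
        issues = critique(plan, draft)
        if not issues:
            break
        draft = refine(draft, issues)
        passes += 1
    return draft, passes


if __name__ == "__main__":
    image, passes = generate("red fox, snowy forest, sunrise, film grain")
    print(sorted(image), passes)
```

The `max_passes` cap is a design choice in this sketch: it keeps the loop from running forever if the critique step never reports a clean result. A one-shot model corresponds to calling `first_draft` alone and returning whatever comes out.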

Enhanced control and realistic results

Nano Banana 2 also improves control over angles, viewpoints, and color precision, letting users achieve more accurate visual perspectives. One standout improvement is the ability to edit text within an image without altering the rest of the scene, a task most AI image tools still struggle with.

These refinements promise a more reliable creative experience, where users get images closer to their intended vision without repeated prompts.

From viral hype to real-world use

The first Nano Banana model gained viral attention for its ability to create lifelike “action figure” versions of real people. With Nano Banana 2, Google seems poised to take that realism even further. Early shared samples show consistent character likeness and natural scene coherence, suggesting the model could further blur the line between real and AI-generated imagery.

What makes Nano Banana 2 stand out is its built-in self-correction during image creation. Instead of waiting for user feedback, it reportedly detects and fixes issues on its own. If the leaks hold up, this would be a notable shift in approach for Google’s AI research, toward models that plan and check their work before producing a result rather than simply reacting to user inputs.